I will say something controversial.

It has gone on long enough. It is time to bring sanity back to the rules and regulations surrounding the entire pantomime that is COVID-19 management in SIN city. The political capital that had previously been amassed has already been spent, and it is all downhill from here---there will be other ways of regaining the lost political capital some time in the future, well before the next election, I'm sure; they will always find a way to do so.

So there is no excuse not to bite the bullet and do the right thing now.

What is the right thing then? Instead of throwing money at various ``support schemes'' to keep entire industry sectors in the thrall of the government (and thus justifying the need to increase taxes in the near future to make up for the shortfall from ``emergency spending''), work towards reducing the many rules that restrict movement so that people can start to organically support the various businesses that are on these ``support schemes''---this means actually planning for an exit rather than just reacting on a knee-jerk basis. I know that there is a crisis to manage, but I would be disappointed if the powers that be had not set aside a skeleton crew (or even planned time within the existing task force) to actually think about what our exit might look like, to define the end conditions of what constitutes a ``successful management of the COVID-19 crisis in SIN city'', and to communicate them to the general public. Without a defined end-state that everyone is made aware of, there is simply no way to proceed, and the task force might as well be formalised as yet another ministry, since it will essentially exist ``forever'' with its mission never complete.

Instead of trying to regain global reputation by being overtly friendly to those outside of SIN city while neglecting the residents of SIN city, focus on rebuilding the local community so that it is actually self-responsible and makes its own risk assessments. The vaccination rate of SIN city residents is already exceedingly high, and after two long years, COVID-19 isn't going anywhere, ever. Standardise the restrictions to ensure consistency between what is allowed locally and what is allowed at MICE events. Foreign capital will always appear sweet, but without the backing of the residents who add value to that capital, what's the point of having so much of it? It will simply come, realise that it is all a sham, and then leave just as quickly. The rise and fall of various national-level initiatives involving foreign investment are but a demonstration of this ``easy come, easy go'' nature---businesses have neither morality nor responsibility to anyone or anywhere they go, save for their profits. I know that SIN city has been an entrepôt for most of its history, but come on, residents (permanent residents, or even citizens) actually exist and they are the backbone of the economy. The healthcare sector's inability to cope with every case is a long-term problem that has been overlooked for too long---take that as an existing limitation, formulate a better strategy to keep those who are not actually that ill at home, and remember to strengthen this sector in the future (it's a technical debt that needs to be paid). On that note, the change in regulations allowing those who are less ill to convalesce at home did arrive, but damn, it came late.

COVID-19 was a shake-up that could have allowed a rewriting of our social norms to make them more resilient, and to bring back more of that gotong royong that was alluded to as the key success factor of early post-war SIN city. Instead, we get a people who are afraid of their own shadow, seeing danger at every corner, misfed with misinformation, thrown into chaos by a myriad of rules that seem unprincipled, stressed out and tired with little to no end in sight, while developing stronger anti-social attitudes that, taken together, can undermine everyone's safety and right to live all at once.

If the true purpose was to reshape a people to remove any last vestiges of discernment and make them more pliable and serf-like, then perhaps the powers that be have succeeded well. The only thing lacking is probably a social credit system, but with all the so-called ``digitalisation'' going on at the national level, it is only a matter of time before some watershed event provides enough political momentum to bring it online---it takes a strong and principled government not to want to do that with all that tempting data that is already collected. I mean, we are already lugging around a tracking beacon under the TraceTogether programme, and it has suffered at least one instance of mission creep, from merely enabling contact tracing to becoming a shibboleth for basic living activities under the SafeEntry programme, owing to its gate-keeping role in demonstrating vaccination status, with no end in sight.

I will eat my words on the day an official announcement is made of a specific deadline for retiring the TraceTogether programme; frankly, I'm not going to hold my breath on this one.

That's all for now. Also note that this is the last post for October---all the October posts have titles that begin with `M'. It was tricky to figure out what title to use each time, but it all turned out well. Consider it a warm-up of sorts for the NaNoWriMo that kicks off tomorrow.

Till the next update.

An eclectic mix of thoughts and views on life both in meat-space and in cyber-space, focusing more on the informal observational/inspirational aspect than academic rigour.

Sunday, October 31, 2021

Saturday, October 30, 2021

Meeting My Daily Writing Requirements

I filed a support request regarding the fan vibration. The sound was getting bad---it had scraping elements in it, and was triggering at random moments as well. Terrifying. It'll be a while before they get back to me though, considering that it is a weekend after all.

I hope that they can replace the fan assembly so that new bearings and other good stuff are in place.

An Ethic for Christians and Other Aliens in a Strange Land by William Stringfellow is starting to get more interesting. It largely covers Revelation from the Bible, and uses it as a way of talking about Christian ethics while still living in this fallen world [of Babylon]. This is in contrast with the more predictive nature of God's Prophetic Blueprint by Dr Bob Shelton. I don't think that either is ``a more correct interpretation'' than the other, mostly because Revelation itself has strong eschatological components that are less ``targeted'' than the epistles that make up most of the New Testament, but also because each uses a slightly different approach to come to conclusions that are broadly in agreement (as they should be, since both draw from the same Bible). But I will defer discussion till after I've finished An Ethic for Christians and Other Aliens in a Strange Land.

Tomorrow will be the last day before NaNoWriMo kicks in. I think I'll watch me some Jump King gameplay by Calliope Mori while going ham with Serious Sam 3: BFE and watching the temperatures---I've had to lay Eileen-II flat on the desk without the support struts because I didn't want to risk more excessive vibrations. I took apart the back cover again this morning and added more Vaseline as lube---it seemed to work well as an interim measure, as long as it doesn't dry up. I think the vibration problem came back after the last application because I was running the fans at full speed for most of yesterday, figuring that more cooling would let me sustain the turbo-boosted frequencies for longer.

Ah well. Stupid lousy hot and humid weather that is SIN city. Can't do much about that.

Till tomorrow's update then.

Friday, October 29, 2021

Micro-update

No words today to spare. Saving them up for when NaNoWriMo begins.

Only thing I would say is, the fan in Eileen-II was acting up again, and I re-applied as much Vaseline as I could squeeze in with a toothpick. It seems to work well so far, even when running under the ``Full Power'' profile that basically pushes the fan speeds to 100%.

I'm going to take a shower and then turn in for the night. The day has been quite long. Till the next update then.

Thursday, October 28, 2021

Mini Update

NaNoWriMo season is going to be upon us very soon. I can't wait to dump all the negativity that I have been accumulating throughout the whole year into this one novel as a type of mental purge.

It's sort of like what happened in 2013, where I completed the entry, but I did not put it up for download. I am tempted to do the same for this year's entry. I mean, with a title like 50 Ways to Die, what would you be expecting me to write?

I cropped my hair down again today. It was starting to get long enough that under the heat, the perspiration was getting very uncomfortable.

And that's about it. I've been playing a bit more of Serious Sam: Before the First Encounter. I find that as I get older, I can't play first-person shooter games in long stretches any more. Not sure why, it's just a thing. I remember that I could easily play 8--9 hours of Doom, Duke Nukem 3D, or even Shadow Warrior. Now... I'd be lucky if I could even get one hour non-stop.

No work on LED2-20 yet... it's getting to the part that requires quite a bit of concentration and more assets to set things up for testing.

Till the next update then.

Wednesday, October 27, 2021

Mumbles About Fear

Fear. Everyone has it in one form or another, whether or not one is aware of it. Fear is something deep within, and is a manifestation of either weakness or uncertainty. Those who claim to be fearless are either naive (by choice or otherwise), or extremely certain of the outcome.

Generally speaking, there are few outcomes that are certain. Physical death, for example, is usually quite certain---there's no coming back from that for the most part. That's why those who have terminal illnesses (physical or otherwise) for example, after a period of grief and denial, eventually become fearless upon acceptance.

But I'm in no position to talk about fearlessness. Today I want to talk about fear itself.

Most people fear uncertainty for the simple reason that uncertainty tends to be unbounded unless extra thought goes into applying mitigating factors that can reduce its range. This is what preparation usually entails---taking control over what we can control and doing the best we can in preparing for the eventualities that we can reasonably figure out. Whatever is left will still be uncertain, but at the very least that level of uncertainty is bounded. Those who want to further reduce their fear of the uncertain can pray and trust in God to take care of whatever else they cannot take care of themselves, particularly so if they are believers. While there is never a firm guarantee that the outcome will be good for the person himself/herself (if the outcome could be guaranteed, it would no longer be uncertain by definition), at least the believer can have faith in the actions of their righteous God who can do no wrong by definition.

As for fear stemming from weakness... I think that it is a reaction that comes from those who did not proverbially ``do their work to earn their bones''. If one had undergone the necessary steps rigorously to learn a skill or to come to power, without relying on shortcuts but instead going through a trial by fire to earn one's skill or to have one's power invested by others, then one is less likely to feel weak, imposter syndrome notwithstanding. Part of that reaction stems from one's interpretation of what others seem to think of one, whether they are peers, a more senior person, or even other governments.

Newsflash: no one on this wide Earth knows what they are doing, and everyone is making things up as they go along. The harshest critics of ourselves are ourselves, because we see ourselves every day, and we remember all the seemingly weak things that we have done compared to any other person. Only God knows what is going on at all times, and the rest of us just do what we can. Now while I said what I said, I want to point out that this doesn't mean that there are no degrees to the ``correctness'' of the things that people are making up as they go along---that's what the ``exercising of one's judgement'' means. The ``judgement'' here is more ``discernment'' than ``pronouncement of whether a person is righteous or not'', and it should be applied to the courses of actions that one can take as opposed to applying it to others.

I raise this issue of fear here because I had been thinking about the whole competitive nature of things, as well as how some governments get so thin-skinned over criticism of the ways that they do things. A government that gets its mandate of governance through the direct support of the people, as opposed to being parachuted into its position, tends to have higher levels of trust from the people, and thus tends to be less fearful of critics---it knows that it has the unpressured support of the people, and is thus freed up to concentrate on doing the right thing instead of just appearing to do so. Unfortunately, in many systems of government, the process by which the mandate of governance is obtained has been corrupted into a byzantine one that naturally favours the incumbent instead of the one that is actually good for the people. And when the results are not sufficiently favourable to the incumbent (despite winning), the process gets further mangled to bias it more towards the incumbent in the next round.

Fear does power a lot of vicious cycles. Arms races are the most obvious ones, and so are the more recent ``race to zero'' economic races in some sectors of industry. A key leveller that we have now but the people of old didn't is fast global communications. Information can flow from one part of the planet to another somehow, and with it comes the ability for those oppressed under the fear to band together in solidarity and create their own bloc to push back. How they push back, though, will determine whether they end up assuaging the fear that the oppressors feel, or exacerbating it and causing an even larger backlash.

Humanity's greatest strength [of banding together] is also its greatest weakness, because the like-minded amplify their own perceived reality. Truly homogeneous societies amplify their perceived realities much faster and more strongly, but not necessarily for the better. A better understanding might lead to a reduction of uncertainty, and systems that promote capability independent of socio-politico-economic standing can produce people more certain of their strength and less worried about weaknesses, imaginary or otherwise. But these are ideals---I doubt that we can achieve them in real life.

And so fear will still rule this world and drive much of the associated suffering from the many vicious cycles.

Tuesday, October 26, 2021

Making Sense of the Rules of the Internet

To make up for the low-effort posts of the past few days, let me do a commentary on The Rules of the Internet that I had alluded to way back in August.

Here we go:

- Don't f**k with cats. If there's one thing that the majority of the Internet agrees with, it's that cats are cool, and by extension, other [cute] animals are cool too. So don't be a dick and harm them.

- You don't talk about /b/. We don't talk about /b/. By extension, we don't talk about the online groups that we may/may not associate ourselves with in meat-space.

- You DON'T talk about /b/. We DON'T talk about /b/. This is important enough that it bears repetition.

- We are Anonymous. The fundamental rule of the Internet is that there are personalities, but there is never a clear mapping between personalities on the Internet and real-world people. Anonymity is a built-in nature of the Internet, so don't let the big corporations fool you into thinking that somehow it is necessary to identify one's real-world persona with any personality. In fact, it can get dangerous to do so if we look at the later rules.

- We are legion. For every personality we see on the Internet, there are many more lurking in the background---there is no way to tell just how many there are. Never assume that the single handle one sees is associated with only one person---it could be more, or it could even be none (an automated shill).

- We do not forgive, we do not forget. Since personalities on the Internet are anonymous and legion, there will always be at least one person who remembers, and there is always at least one person who will hold a grudge---it is wise to remember these even as one tries to participate.

- /b/ is not your personal army. A ``personal army'' is like an activist group of sorts that can advance one's agenda. It comes directly from rules 3, 4, and 5, really. In short, don't treat any [loose] association group on the Internet as a potential ally to advance one's personal causes. So all those ``woke'' groups (and ultra-conservative ``no-chill'' groups too) are making the mistake of thinking that those Internet groups they created are their ``personal army''. Spoilers: It will backfire.

- No matter how much you love debating, keep in mind that no one on the Internet debates. Instead they mock your intelligence as well as your parents. Ho ho ho... this one's a stinger. It's easy to forget this rule in the Social Media Age, because we get this false equivalence of a person and an online personality due to the hasty conclusion that since a [profile] picture and a ``real'' name can be associated with the online personality, they are therefore ``real'' people who are willing to listen and act the way ``real'' people would in meat-space. This rule completely disabuses that notion. Debates occur on the Internet only under two specific conditions: it's a tight group where every personality present has an associated reputation that everyone in the group knows, and the point under discussion is a very narrowly defined topic that is of interest to the tight group. And even then, the debate that occurs is like how one would normally view a ``real-world'' debate: an exercise in rhetoric a la competitive debating rather than a true attempt at decision-making. In general though, it is good advice to remember that debates never really change anyone's mind---most people go into them with a specific mindset already, and are usually there aching for a verbal fight, not to have their minds changed.

- Anonymous can be a horrible, senseless, uncaring monster. Well, that comes from being unidentified with a real-world person---the repercussions of any action that Anonymous takes are limited to whatever handle made the said action. Even the personality associated with that handle walks away unscathed, no matter the outcome.

- Anonymous is still able to deliver. This comes from rule 4---with a legion, it is hard for Anonymous not to deliver. Even when there are some really strong forces keeping them at bay (like a well-funded intelligence agency), some of Anonymous might just make it through regardless, just to show that they can (and to fulfil rule 9).

- There are no real rules about posting. Apart from the protocols that are used to send the bits all over the Internet, all other interaction rules are organically driven anyway. This is, sadly enough, true of meatspace as well.

- There are no real rules about moderation either---enjoy your ban. Same concept as in rule 10, except this is about personalities with power. In meatspace, we have laws (and their associated enforcement) to restrict those whom we invest with power, to ensure that the power allowed isn't misused (even though it does get misused anyway, but if it is legal at that point of time, it's still legit). On the Internet, there is no such thing---Might Is Right holds. In many ways, this is really a natural law in action. In the Social Media Age, the false equivalence of online personality and meatspace person has lulled participants into believing that meatspace rules apply, and so they get all angry when they seemingly get banned for ``no reason'', and then they make a stink about how it goes against their constitutional rights and what-not. I can understand their frustration---it literally comes about because of the false equivalence. The solution then is just to play nice... it is someone else's walled garden one is playing in after all.

- Anything you say can and will be used against you. More so on the Internet, because that is usually all anyone sees. Sure, you can be dumb enough to upload a video of yourself defending your words and what-not, thinking that it would do any good, but all you've done is give Anonymous more things to use against you, specifically ad hominem attacks on your appearance.

- Anything you say can and will be turned into something else. This used to be bad in the old days, but now, thanks to the proliferation of videos (which provide nice appearance and sound samples), this is more insidious. Have fun getting your meatspace reputation smeared in mud for that one-time indiscretion.

- Do not argue with trolls---it means they win. Most folks on the Internet aren't there to cause trouble---they just want to seek new information/perspectives. But there are a select few who get all excited to piss people off---they are the notorious trolls. Trolls are everywhere really---in meatspace we tend to call them ``assholes'' or ``dickheads'' or ``CB-kia''. They have no shame, and they don't care about reasoning. They are there for the fight, real or otherwise. ``Karen''-type behaviour can be seen as a contemporary meatspace equivalent of a troll. Any form of engagement immediately leads to a losing position---it then just becomes a question of how much one loses. Take rule 14's advice: do not argue with trolls.

- The harder you try, the harder you will fail. Oof. This one hits hard in the Social Media Age. All that stupid trendy bullshit copycat TikTok video nonsense? Yeah... we saw all that in text form aeons ago on the Internet. At least failing back then could be insulated through the use of disposable personalities. Now, with one's bloody face associated with it, and the false equivalence of personality and person kicking in, combined with The Algorithm of the corporations running the social media, good luck redeeming yourself from your failure. My advice? If you're gonna do things on the Internet, at least do something that you are interested in, and not because you want to follow a trend. The more you want to follow a trend, the harder you are trying, and then rule 15 kicks in. Oh I nearly forgot... the trendy word for rule 15 today is ``cringe''.

- If you fail in epic proportions, it may just become a winning failure. Ever seen a movie so bad that it was good? Rule 16 encapsulates this point. And I suppose it is an inspiration of sorts for the try-hard behaviour that rule 15 captures. ``Failing in epic proportions'' isn't a high-probability thing---you are more likely to just fail normally instead. It reminds me of an apocryphal story about a professor who was giving a true/false-type examination, stating that if anyone could get all the questions wrong (i.e. an epic failure), they would get full marks for that exam (i.e. a winning failure). The catch, though, was that if one did not get all the questions wrong, then the number that was correct would be the final score instead. Cast in those terms, it becomes more obvious that one should probably not try one's luck at rule 16.

- Every win fails eventually. Trends come, trends go. Anyone still remember grumpy cat? Or numa numa? Or even ``coffin dance''? Yeah, me neither.

- Everything that can be labelled can be hated. Oh this one is a fun one. We say that humans beat other animals because we developed writing, which allows us to learn stuff without personally experiencing it. That's only half-true: writing as an ability is useless without some kind of information compression that lets us quickly and effortlessly refer to an entire body of knowledge with a simpler symbol, and then build up the necessary amount of detail from such symbols. That is what we mean by ``label''. This is the strategy that ``woke'' culture uses mercilessly---they figured out how to label different groups of people whose mainstream behaviour they don't like, and from there, they make it super easy to identify and then lambast them. I mean, meatspace has tons of such examples too: ``radical left'', ``communists'', ``capitalist pigs'', and so on. They all come from a practical implementation of othering. We can't easily define the in-group through positive identifiers, so maybe we can define it by defining the out-group of those we don't like, and then applying the ``if you have any characteristics of the out-group, you are not part of the in-group'' rule. And from there, hate can flow.

- The more you hate it, the stronger it gets. Oof... another psycho-social reality. When the out-group gets increasing amounts of hate for being in the out-group, its members can start embracing their out-group label, turn it into their own banner and rallying cry, and then push back, hard. For contemporary examples, just look at the whole anti-vaxxer movement, the awkwardness that is the US Republican Party, or even the libertarian tendencies of some US Democratic Party folks. It's all about pushing back against what was pushed at them. Rule 19 provides a solution of sorts for dealing with groups that one doesn't like [on the Internet]---don't go around provoking them.

- Nothing is to be taken seriously. Remember rules 3, 4, and 7? When personalities with no meatspace stake interact, the interaction shouldn't carry the weight of seriousness that one would otherwise expect. Take it easy man... just because hunter2_69420 called you a bad name doesn't mean that you are one, nor that you should take it seriously---you don't even know if hunter2_69420 is human or not. This holds even under the false equivalence of personality and person that prevails on social media. ``Dems fighting words'' only works when you and that person are in meatspace, and under those conditions, there are enough social cues to show just how serious things are. On the Internet, what social cues?

- Pictures or it didn't happen. Everyone can say what they want, but they've got to back it up with their own evidence to show that it is true. So, if someone claims that the Earth is flat, that person needs to supply the evidence showing that the Earth is indeed flat, and it is not up to someone else to show that the Earth is not flat. The burden of proof should always be on the proclaimer, but always remember rule 7.

- Original content is original only for a few seconds before it's no longer original. Every post is always a repost of a repost. Ah, the old classic. Nothing remains original for long on the Internet, in the same way that nothing remains original for long in meatspace. The only difference between the two is duration: meatspace duplication of ideas might take longer due to the need to actually replicate things that take time to happen (think chemical processes, mechanical processes and what-not), whereas replication on the Internet is just a simple duplication of bits. If one sees something, there is a very high chance that it is a reposted item. This is especially true of memetic material from ``groups'' on curated social media. These ``groups'' exist to game some engagement metric, and their way of doing it is to aggregate popular material. Except rule 15 precludes them from creating their own popular material, so they just rely on low-effort reposting of reposts, oftentimes with just enough effort to further obfuscate the origins of the item. And this is why, my friends, if you want to do something original and publish it [on the Internet], don't go in wanting to retain ownership; it won't work that way... see also rule 62. You are better off going with Creative Commons or some other open-source-type license. If retaining ownership is that important, consider doing something that is more physical in nature, something that is a little bit harder to replicate and, more importantly, where you can apply meatspace law to enforce that ownership. Even then, rule 62 guarantees that with all the effort thrown in, all you are doing is buying a little time between holding ownership and the item going onto the Internet as a ``free'' thing.

- On the Internet men are men, women are also men, and kids are undercover FBI agents. Oh, this might be controversial to the PC crowd. Yes, it's misogynistic, but hey, it's the first-mover advantage. If anything, this rule tells us not to bring gender and age into any Internet discussion, because remember rule 18. If one comes in and begins with ``as a 32-year-old ciswoman...'', you are not empowering yourself in any way; you are just making it easier for people to commence ad hominem attacks, not to mention the effects of rules 7, 12, 13, and 14. Go in as a faceless personality and ``enjoy'' what the Internet has to offer instead of trying to force your agenda. Changing the world involves fixing it from meatspace, not from a place where nothing is taken seriously (rule 20). Does that require more effort than angry-posting in whatever part of the Internet one lurks? Absolutely, but the things that matter usually require more effort anyway---get used to it. By all means use the Internet to organise, but don't think that the Internet alone is sufficient to get real change done.

- Girls do not exist on the Internet. See my comment on rule 29, and rules 12 and 13.

- You must have pictures to prove your statements; anything can be explained with a picture. See my comment on rule 21.

- Lurk more---it's never enough. Ooo... this one is spicy. In the Social Media Age, where ``engagement'' is highly valued, a lot of effort has gone into making people interact as soon as possible instead of letting them lurk. This naturally increases friction, because the existing interaction dynamic (I'll call it ``culture'') gets challenged by the newcomers. The existing population is angered by the upstarts threatening the peace, and the newcomers are angered that the existing population is not seeing the ``right way'' of doing things. The key issue here is that unlike meatspace, social cues hardly exist on the Internet. The closest thing to social cues is the culture inferred from the words exchanged within the group. Those who are already in the group are a part of the culture, while those who are new are not---it then behooves the newcomer to learn the culture proper before participating actively. In meatspace, we often put newcomers in their place very quickly thanks to the myriad social cues that trigger strong visceral reactions (if one is really out of line, one can sense the ``air'' ``going bad'' very quickly). No such thing exists on the Internet, whether or not the false equivalence of personality and person is in play. In fact, I would say that the false equivalence of personality and person is one of the contributing factors to the lack of lurking---it falsely empowers people entering a new online group, as they believe that their personality carries forward into every group they are in. It's like that stereotypical person shouting ``do you know who I am?!'' at the poor customer service representative. Well, this is a new/different social group; we don't know who you are. Conversely, we do know our group, and we also know that you are out of line. 
Since social cues don't exist on the Internet, what usually follows is a backlash and smackdown that just escalates. See also rules 10, 11, 12, and maybe 18.

- If it exists, there is porn of it. No exceptions. Yeah. People are weird with what gets them off (see rule 36). The only difference is that with the Internet, physically impossible fantasies are not completely impossible.

- If there is no porn of it, porn will be made of it. Yeahh... see rule 34.

- No matter what it is, it is somebody's fetish. Just remember what fetish means.

- No matter how fucked up it is, there is always worse than what you just saw. ``Fucked up'' can mean many things, but remember that human depravity can go really deep. Consider that many of the largest advances in technology have come from people thinking about how to hurt/maim/kill others more efficiently/effectively.

- No real limits of any kind apply here---not even the sky. See also rules 10, 11, and 20.

- CAPS LOCK IS CRUISE CONTROL FOR COOL. IT'S REALLY SHOUTING, BUT SHOUTING IS COOL EVEN IN MEATSPACE.

- EVEN WITH CRUISE CONTROL YOU STILL HAVE TO STEER. MAKE SURE THAT WHILE YOU ARE SHOUTING, YOU ARE NOT SHOUTING COMPLETE GIBBERISH!

- Desu isn't funny. Seriously guys. It's worse than Chuck Norris jokes. This rule is quite dated these days. I don't think anyone remembers ``desu''. Think of it as a specific interpretation of rule 17.

- Nothing is Sacred. A different emphasis of rules 37, 38, and maybe even 8.

- The more beautiful and pure a thing is---the more satisfying it is to corrupt it. It's like cultivating a bonsai... it takes time and effort. And naturally, the greater the effect, the more satisfying it gets. Thanks to rules 37, 38, and 42, the Internet is more of a corrupting influence than a wholesome one anyway---why do you think God is pissed at human sin?

- If it exists, there is a version of it for your fandom... and it has a wiki and possibly a tabletop version with a theme song performed by a Vocaloid. Okay, let's break this down. A fandom is a community of fans of something (let's call it by the metavariable ``Abraxis'' for ease of reference). These fans usually like to mash up Abraxis with other currently popular things to derive fun/pleasure (a potential application of rule 36, or 43, depending on context). Thanks to the existence of Fandom/Wikia, hosting a knowledge-base of any sort is easy (think Wiki). The tabletop version and the theme song performed by a Vocaloid are both part of the mash-up that I referred to. Note also that rule 44 doesn't just apply to Abraxis... it applies to derived works of Abraxis as well. So if Abraxis was originally a novel, there might be a game derived from it, and that game will have its own wiki, tabletop version, theme song sung by a Vocaloid and what-not.

- If there is not, there will be. It's the induction rule as applied to rule 44.

- The Internet is SERIOUS FUCKING BUSINESS. This sounds antithetical to rule 20, but it is more of an ironic statement. I mean, consider rule 20, and then contemplate the fact that this list of rules of the Internet even exists. Head broken yet? So yes, there are people who take the Internet so seriously that it spills over into meatspace (or elsewhere, really), but it is important to remember that the Internet matters only in itself and as a potential communications channel for meatspace things. It should not be seen as a replacement for meatspace, nor can it be manhandled like meatspace.

- The only good hentai is Yuri, that's how the Internet works. Only exception may be Vanilla. Um, anime girls/women are usually drawn much better than anime boys/men. There's also the predominance of ``men'' on the Internet (see rules 29 and 30).

- The pool is always closed. An old, old meme of a coordinated denial of service from yesteryear forever remembered as this rule. Still, remember rule 6.

- You cannot divide by zero (just because the calculator says so). Another old meme.

- A Crossover, no matter how improbable, will eventually happen in Fan Art, Fan Fiction, or official release material, often through fanfiction of it. Fan fiction/art is just one of the many ways fans of Abraxis (see rule 44) choose to be active participants in the appreciation of Abraxis itself. And when someone is a fan of multiple Abraxis? They will inevitably play out ``what-if'' scenarios where their favourite character of Abraxis 1 meets their favourite character of Abraxis 2. The more talented will then make the art, or fiction, or whatever, and thus rule 50 will be validated.

- Chuck Norris is the exception, no exceptions. Self-referential ironic statements are a fact of life on the Internet. Don't worry too much about it.

- It has been cracked and pirated. You can find anything if you look long enough. Sadly, this is true. If your main skill is in things that can easily be cracked/pirated, perhaps consider how to incorporate aspects that can make you some money while being resistant to being cracked/pirated. Physical merchandise is a thing for creative works, while gating functionality behind a Software-as-a-Service model is another. Having a donation account for direct monetary contributions is also a good way of getting support/remuneration without necessarily resorting to ``evil'' ways. Just remember that, contrary to what corporations like to say, a pirated/cracked copy isn't usually a ``lost sale''---it was never really a sale to begin with. Tolerating some amount of piracy can actually turn out well, because it can create a de facto standard instead (remember the days of Photoshop before it all went to the cloud?).

- For every given male character, there is a female version of that character (and vice-versa). And there is always porn of that character. A specific instance of rules 34, 35, 44, 50, and maybe even 43.

- If it exists, there's an AU of it. Yeah, it's like rule 50, except the crossover is with itself. Sometimes Abraxis develops in a certain [unnatural] way because of external constraints like time/money, and if the fandom is unusually vocal, they might just come up with their own interpretations of things.

- If there isn't, there will be. See my comments in rule 64.

- Everything has a fandom, everything. Related to rules 36, 44, maybe 46, and 50.

- 90% of fanfiction is the stuff of nightmares. A specific implementation of rules 36, 37, 42, and maybe 43. There could be more, but damn this post is bloody long.

- If a song exists, there's a Megalovania version of it. As noted before, this is too new for me to comment on.

- The Internet makes you stupid. Yes it does. I just spent a couple of hours commenting on the rules of the Internet, didn't I? And didn't you just spend some time reading/skimming through this too? Not to mention the more serious problem of misinformation, which arises because people spend so much time on the Internet and falsely equate the correctness of a statement with whether they personally trust whoever forwarded it to them, instead of evaluating its truth via the veracity of the supporting evidence and the relevant expertise of the person making the statement.

Till the next update.

Monday, October 25, 2021

Math?

Man, is it truly Monday? It's getting increasingly hard to tell which day of the week it is these days.

I worked on a little bit of math to figure out how to define ellipses for use with the CIRCLE statement to show the ``shield'' for LED2-20. It was not particularly hard, but it did get me thinking about how I can pre-compute the parameterisation at hybrid-bitmap generation time so that I can just load it all into memory and use it accordingly. I also started working on some line segment-ellipse intersection math, but it was algebraically hairy and I didn't get too far. That last part is also meant for collision detection, and it made me think about the best way to structure my data so that the collision detection process isn't too bad.
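For what it's worth, here is a minimal Python sketch (not the actual LED2-20 code, which is QuickBASIC) of both halves of that math, assuming an axis-aligned ellipse with horizontal semi-axis `a` and vertical semi-axis `b`. The helper names `circle_args` and `segment_ellipse_entry` are made up for illustration. The CIRCLE conversion follows my reading of the QuickBASIC documentation (aspect is the y-radius over the x-radius, and the radius argument is the x-radius when aspect is below 1, the y-radius otherwise), so treat it as something to double-check. The intersection trick is to rescale the ellipse into a unit circle, which turns the hairy algebra into a plain quadratic in the segment parameter `t`.

```python
import math


def circle_args(a, b):
    """Hypothetical helper: ellipse semi-axes -> (radius, aspect) for CIRCLE.

    Assumes QuickBASIC's rule that aspect = y-radius / x-radius, and that the
    radius argument is the x-radius when aspect < 1, the y-radius otherwise.
    """
    aspect = b / a
    radius = a if aspect < 1 else b
    return radius, aspect


def segment_ellipse_entry(x0, y0, x1, y1, cx, cy, a, b):
    """Return the t in [0, 1] at which segment (x0, y0)-(x1, y1) first touches
    the filled axis-aligned ellipse centred at (cx, cy), or None if never."""
    # Scale into the ellipse's frame, where it becomes the unit circle.
    u0, v0 = (x0 - cx) / a, (y0 - cy) / b
    du, dv = (x1 - x0) / a, (y1 - y0) / b
    # |(u0, v0) + t (du, dv)|^2 = 1  ->  qa t^2 + qb t + qc = 0
    qa = du * du + dv * dv
    qb = 2.0 * (u0 * du + v0 * dv)
    qc = u0 * u0 + v0 * v0 - 1.0
    if qa == 0.0:                  # degenerate segment: a single point
        return 0.0 if qc <= 0.0 else None
    disc = qb * qb - 4.0 * qa * qc
    if disc < 0.0:                 # the infinite line misses the ellipse
        return None
    root = math.sqrt(disc)
    t_in = (-qb - root) / (2.0 * qa)
    t_out = (-qb + root) / (2.0 * qa)
    if t_in > 1.0 or t_out < 0.0:  # the overlap lies outside the segment
        return None
    return max(t_in, 0.0)          # 0.0 means the segment starts inside


# Pre-compute once (e.g. at bitmap-generation time) for made-up shield sizes.
shield_table = [circle_args(a, b) for a, b in [(12, 8), (16, 10), (20, 14)]]
```

Returning the entry parameter rather than a plain yes/no is a deliberate choice: `t` doubles as a time of impact within the frame, which is handy if the collision response needs to know where along the path the hit happened.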

I was also thinking about the numerical scaling needed for the various features of the player character, and how best to represent them in gauge form. Right now I am leaning towards a gauge that is linear when short and logarithmic beyond a certain point, to handle the potentially large dynamic range (I'm thinking of a difference of about six or more orders of magnitude from end to end).
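One way such a hybrid gauge could be mapped to pixels is sketched below in Python. It is a hypothetical sketch, not LED2-20's code: values up to a `knee` fill the first `linear_frac` of the bar linearly, and values from `knee` to `vmax` fill the rest on a log10 scale. The parameter names and the 30% default split are my own assumptions.

```python
import math


def gauge_length(value, knee, vmax, bar_px, linear_frac=0.3):
    """Map value in [0, vmax] to a bar length in pixels (hypothetical helper).

    Linear on [0, knee], logarithmic on [knee, vmax]; the two pieces meet at
    the knee, so the bar never jumps as the value crosses it.
    """
    if value <= 0:
        return 0
    value = min(value, vmax)                 # clamp overflow to a full bar
    linear_px = linear_frac * bar_px
    if value <= knee:
        length = linear_px * value / knee
    else:
        log_px = bar_px - linear_px
        length = linear_px + log_px * math.log10(value / knee) / math.log10(vmax / knee)
    return round(length)
```

With `knee=10` and `vmax=1e7` (six orders of magnitude above the knee) on a 200-pixel bar, a value of 5 gives 30 pixels, 10 gives 60, 10,000 gives 130, and 10,000,000 fills all 200. The mapping is continuous at the knee but its slope is not, so there is a visible change in "speed" when the bar crosses over; whether that reads well on screen is something only testing would tell.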

In short, lots of thinking about implementation details of LED2-20.

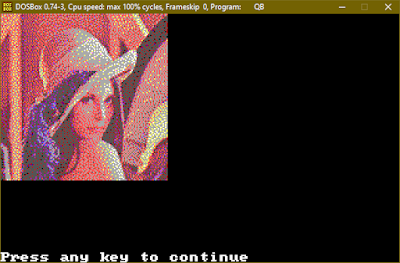

Incidentally, I've also discovered DOSBox-X, a fork of DOSBox that aims to be a more complete emulator of PC hardware from before the Pentium II era. It claims more features than the old DOSBox, but the one I found most useful was the ability to send Ctrl-Brk to the underlying program---this is the main way of telling QuickBASIC to pause execution and go back to the IDE. DOSBox cannot do that for a whole bunch of reasons, and it is unlikely to be fixed at this stage, since this key combination is more likely to show up during development work in DOS than while playing a game. The alternative is to use Ctrl-Scroll Lock, but funnily enough, Eileen-II does not have a Scroll Lock key(!).

Apart from that singular feature though, everything else DOSBox-X offers isn't as interesting, nor is it needed, so I'm just going to use regular DOSBox as originally planned. Actually, there is one issue in DOSBox-X that actively discourages me from using it---when changing video modes, it has a rather unhealthy amount of delay, even on maximised emulation speed settings. I don't remember old hardware ever exhibiting that problem... except maybe some Matrox displays from back in the day. Not sure why, and not bothered enough to figure it out when I have a working alternative.

I also played a few rounds of Jupiter Hell, trying to get a 100% kill on Angel of Carnage on Ultra-Violence. Man, those Medusas hit real hard. I was doing well till I faced them. To be fair, that was also the first time I faced those enemies, and at that point, I was doing heavy damage with my modded rocket launcher. It seems to me that my play-style for Jupiter Hell has been very weapon-restricted in general, favouring pistols/shotguns, or just the rocket launcher in this challenge mode. Maybe I should do a ``sight-seeing'' tour with a more conventional automatic weapon-type build instead just for fun.

That's about all I want to write about today. Till the next update then.

Sunday, October 24, 2021

My Cracked Finger-tip Skin Hurts

Today was spent reading. I finished The Abyss by Orson Scott Card, the author of the Ender series. The Abyss is the novelisation of the movie of the same name. It's worth a read---the characters are well fleshed out, and the scenes are as well realised as they were in the movie itself.

Apart from the reading, I didn't do much though. Hmm... I mean, failed runs of Jupiter Hell notwithstanding. I was going for the Gold Badge challenge of completing Angel of Shotgunnery on Hard difficulty for quite a while, but I just couldn't get the tempo right. So I switched over to Angel of Carnage on Ultra-Violence instead, and made slightly deeper progress on the latter than on the former.

I suppose part of the reason why I didn't seem to have done anything active on the PC or otherwise was the cracked fingertip skin on both of my middle fingers. I don't know how or why the cracks appeared---but I do know that they hurt like hell when typing, since my fingers press the keys straight down with the fingertips, as opposed to lying flat. And I can tell you, those cracks in the skin... they are nasty. Mere contact with the key surface is enough to ramp up the irritation through pain, and as the typing goes on, it just breaks whatever tempo there is. And need I mention that the keys they are used on (``edcik,'') are some of the most used keys there are? And when they start bleeding... my keyboard becomes really unsightly and horrible to use.

Needless to say, I can't play any woodwind instruments either, since they need the fingertips to work well.

Anyway, that's all I can write for now. Till the next update.

Saturday, October 23, 2021

Multipolarities

It's been a slow day today. I spent some time reading the ESV Study Bible for Luke and Genesis, a chapter of An Ethic for Christians and Other Aliens in a Strange Land by William Stringfellow, and started on The Abyss by Orson Scott Card. I also watched a couple of Hololive Production videos, played quite a few failed runs in Jupiter Hell, and started on the game object structure definitions in LED2-20 in preparation for adding the game sprite renderer.

I also went to church.

That's about it. Till the next update.

Friday, October 22, 2021

Manipulating Draw Order for Better Performance Through Amortisation

I'll give a quick update and call today's entry a day because frankly, I'm not feeling too good in the head. Too many dark thoughts recently.

Anyway, I talked about getting parallax background movement to work yesterday and said that the speed was acceptable.

Well, it was acceptable in the stochastic sense. In reality, there were big dips in the processing rate every so often, even with the PCOPY caching technique. They came from the spike in workload needed to dump a whole bunch of large (relative to the screen) bitmaps at once whenever the background had to be shifted by a pixel due to the parallaxed movement. This was unacceptable for the simple reason that it affected the output animation in a very obvious way.

20-years-ago me would have thrown up my hands and gone ``well I don't know how to solve it''. The me of today just shrugged at 1am and said ``meh, let's use another screen page (we have 6 spare, not counting the two that we are using for the page-flipping animation technique) to incrementally dump the 21 vertical slices of the background for the next pixel shift, and then just copy it over to the original page for the full background, filling in the missing slices that we didn't manage to do before''. And that was what I did.

It wasn't too hard to implement---I just added a new variable amortisedXtile that kept track of which of the 21 slices I had drawn so far. Then, I shoved in some variant of a conditional on amortisedXtile that would do the relevant PUT as needed. So, instead of paying 21×C all at once to draw the entire background (where C is the cost of drawing one slice), I just pay C per frame over 21 different rendering cycles to spread the cost around. It worked superlatively well in reducing those dips in processing rate.
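To make the amortisation concrete, here is a small Python model of the idea (a sketch, not the real QuickBASIC renderer). Screen pages are modelled as lists of slice contents, the hypothetical `_put` method stands in for one PUT onto the spare page, and copying the list stands in for PCOPY. `amortised_x_tile` mirrors the post's amortisedXtile; everything else is named for illustration only.

```python
NUM_SLICES = 21


class AmortisedBackground:
    """Model of the amortised redraw: one slice pre-drawn per frame."""

    def __init__(self, num_slices=NUM_SLICES):
        self.num_slices = num_slices
        self.visible = [None] * num_slices   # background page currently shown
        self.scratch = [None] * num_slices   # spare page being pre-built
        self.amortised_x_tile = 0            # next slice to pre-draw
        self.offset = 0                      # current background pixel offset
        self.puts_this_frame = 0

    def _put(self, slice_index, offset):
        # Stand-in for one PUT of a slice, drawn at the *next* pixel offset.
        self.scratch[slice_index] = (slice_index, offset)
        self.puts_this_frame += 1

    def tick(self, shift_due):
        """Run once per rendered frame; return how many PUTs it cost."""
        self.puts_this_frame = 0
        next_offset = self.offset + 1
        if self.amortised_x_tile < self.num_slices:
            self._put(self.amortised_x_tile, next_offset)
            self.amortised_x_tile += 1
        if shift_due:
            # Fill in any slices not reached yet, then "PCOPY" the spare page.
            while self.amortised_x_tile < self.num_slices:
                self._put(self.amortised_x_tile, next_offset)
                self.amortised_x_tile += 1
            self.visible = list(self.scratch)
            self.offset = next_offset
            self.amortised_x_tile = 0
        return self.puts_this_frame
```

If the shift only comes due on the 30th frame, every frame costs at most one PUT (21 in total, spread out). If it comes due early, say on the 5th frame, that frame pays for the 17 slices that were still outstanding, which is exactly the fallback the post describes: fill in the missing slices, then copy.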

One last thing I did to further amortise the cost of the parallax background update was to apply the same set-up to shifting the vertical slices by one tile when the left-most one had fallen out of the screen. In a modern set-up, this would be trivial---I would just maintain [say] a singly-linked list of pointers to the bitmaps in memory, and append a new one to the end while dropping the first. Except in QuickBASIC, there isn't such a thing as ``pointers''. No, I can't even make use of the 20-bit physical address thing I mentioned before, because I was using the built-in GET/PUT statements for these. Those are faster than any equivalent I could write in QuickBASIC, unless I decided to go down the Dark Arts Path of using pure assembly (recall that I've disallowed myself the use of that). If I wanted the array representing slice 1 to now represent slice 2, I would have to copy the contents of slice 2 back into slice 1. Sadly, the GET graphics statement works an order of magnitude faster than a FOR-loop that I could code in QuickBASIC.
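For comparison, a common pointer-free alternative to shifting the slice contents is a ring buffer: keep the physical slice buffers where they are and rotate an integer `head` index instead. This is a swapped-in technique, not what the post describes, and whether it maps cleanly onto QuickBASIC depends on storing all slices in one array and passing an element offset to PUT (which I believe the PUT syntax allows, but that is an assumption worth checking). `SliceRing` and its methods are hypothetical names.

```python
class SliceRing:
    """Index-based ring of background slices, standing in for pointers."""

    def __init__(self, slices):
        self.buffers = list(slices)   # physical slice buffers, never shifted
        self.head = 0                 # physical index of the left-most slice

    def logical(self, i):
        """Buffer shown at screen position i (0 = left-most)."""
        return self.buffers[(self.head + i) % len(self.buffers)]

    def scroll_one_tile(self, new_slice):
        """Drop the left-most slice and append new_slice on the right."""
        self.buffers[self.head] = new_slice   # reuse the evicted buffer
        self.head = (self.head + 1) % len(self.buffers)
```

The appeal is that scrolling by one tile touches exactly one buffer (loading the new right-most slice into the one that just fell off) instead of copying every remaining slice down by one position.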

So with all those amortised steps added in, the parallax background renderer is much more efficient than before. All of this is predicated on having more than 21 frames of activity between pixel shifts though, which is something I can control somewhat through my choice of the overall width of the background ``skybox'' image and the overall width of the entire level.
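A quick way to check that frame budget is sketched below, assuming (my assumption, not something the post states) that the skybox scrolls linearly from its left edge to its right edge while the camera crosses the level at a constant speed. `frames_per_bg_shift` is a hypothetical helper.

```python
import math


def frames_per_bg_shift(screen_w, level_w, skybox_w, fg_speed_px):
    """Frames between successive one-pixel background shifts, assuming the
    skybox scrolls linearly while the camera crosses the level at
    fg_speed_px pixels per frame."""
    bg_travel = skybox_w - screen_w     # pixels the background can scroll
    cam_travel = level_w - screen_w     # pixels the camera can scroll
    if bg_travel <= 0:
        return math.inf                 # background never has to shift
    return cam_travel / (fg_speed_px * bg_travel)
```

With made-up numbers (a 320-pixel-wide screen, a 480-pixel skybox, and a camera moving 1 pixel per frame), a 3520-pixel level gives 3200 / 160 = 20 frames per background shift, just short of a 21-slice budget, while a 6720-pixel level gives 40 frames and clears it comfortably. Narrowing the skybox has the same effect as widening the level.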

I also got the explosion rendering pipeline to work correctly, and wrote a basic pixel-collision function between the masks of two sprites. That is not sufficient for true pixel-perfect collision detection though---we need to take into account the intermediate motion between the start and end of the trajectory of the two sprites as well. Otherwise we will have the age-old problem where a sprite moves so fast that it completely bypasses collision altogether. That's a different math problem for a different day, but it will get done.
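Here is a rough Python sketch of both pieces, under stated assumptions: masks are lists of rows of 0/1 values with sprites positioned by their top-left corners, and both sprites move in straight lines during a frame. The function names are hypothetical, and the sweep uses plain sub-stepping (test at every pixel of relative motion), which is one simple technique among several, not necessarily the one LED2-20 will end up with.

```python
def masks_overlap(mask_a, ax, ay, mask_b, bx, by):
    """True if any opaque pixel of mask A lands on an opaque pixel of mask B.

    Masks are lists of rows of 0/1 values; (ax, ay) and (bx, by) are the
    top-left corners of the two sprites on screen.
    """
    for row, line in enumerate(mask_a):
        b_row = ay + row - by
        if not 0 <= b_row < len(mask_b):
            continue
        for col, pixel in enumerate(line):
            b_col = ax + col - bx
            if pixel and 0 <= b_col < len(mask_b[b_row]) and mask_b[b_row][b_col]:
                return True
    return False


def swept_overlap(mask_a, a_from, a_to, mask_b, b_from, b_to):
    """Sub-stepped test over one frame, assuming straight-line motion.

    A's position relative to B changes by at most one pixel per axis between
    tests, so sprites can no longer skip clean past each other in one frame
    (diagonal gaps in 1-pixel-thin shapes can still slip through).
    """
    rx0, ry0 = a_from[0] - b_from[0], a_from[1] - b_from[1]
    drx = (a_to[0] - b_to[0]) - rx0
    dry = (a_to[1] - b_to[1]) - ry0
    steps = max(abs(drx), abs(dry), 1)
    for s in range(steps + 1):
        # Integer floor division keeps each relative step within one pixel.
        rx = rx0 + (drx * s) // steps
        ry = ry0 + (dry * s) // steps
        if masks_overlap(mask_a, rx, ry, mask_b, 0, 0):
            return True
    return False
```

A one-pixel bullet jumping from x = 0 to x = 10 past a one-pixel-wide wall at x = 5 shows the problem: the end-of-frame mask test alone misses it, while the swept test catches it. Testing relative motion also covers two fast sprites passing through each other head-on.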

The final thing that I did was to add a basic gauge-bar renderer. It is similar to, say, the life bar in some modern games: when the number drops, the difference between the original and current values is shown fleetingly as a mini-bar of a different colour. The renderer can do that now, but not in a ``smoothly animating manner'' just yet. I'm not even sure I want to do that, since it will require more sprite elements to keep track of, but we'll see.
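The bookkeeping behind that trailing mini-bar can be fairly small. Below is a hypothetical Python sketch (the `LagGauge` class, `hold_frames`, and the pixel maths are all my own assumptions): the trailing bar holds the pre-damage value for a fixed number of frames and then snaps to the current value, which matches the non-animated behaviour described above rather than a smooth drain.

```python
class LagGauge:
    """Current bar plus a trailing 'recent loss' bar that snaps after a delay."""

    def __init__(self, value, hold_frames=30):
        self.value = value
        self.trail = value            # where the trailing bar currently ends
        self.hold_frames = hold_frames
        self.timer = 0

    def set_value(self, new_value):
        if new_value < self.value:
            # Keep the older, larger value visible and (re)start the timer.
            self.trail = max(self.trail, self.value)
            self.timer = self.hold_frames
        else:
            self.trail = new_value    # gains snap immediately
            self.timer = 0
        self.value = new_value

    def tick(self):
        """Call once per frame."""
        if self.timer > 0:
            self.timer -= 1
            if self.timer == 0:
                self.trail = self.value

    def segments(self, vmax, bar_px):
        """(main_px, trail_px): widths of the current bar and of the
        differently coloured mini-bar drawn immediately to its right."""
        main_px = bar_px * self.value // vmax
        trail_px = bar_px * self.trail // vmax - main_px
        return main_px, trail_px
```

Taking the maximum of the old trail and the old value means repeated hits within the hold window keep extending the same mini-bar instead of resetting it, which is how the life bars I have in mind tend to behave.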

I'm also thinking about incorporating hit-boxes in lieu of just using the mask to define collision. This would allow ``boss'' sprites to be more challenging to tackle. But we'll see.
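As a sketch of why hit-boxes could make bosses more interesting: only specific rectangles (weak points) count as hits, regardless of how much of the boss sprite is opaque. Everything here is hypothetical, namely the `HitBox` layout (offsets relative to the sprite's top-left corner), the function names, and the strict-inequality convention that touching edges do not count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HitBox:
    """Axis-aligned rectangle relative to a sprite's top-left corner."""
    dx: int
    dy: int
    w: int
    h: int


def boxes_overlap(ax, ay, box_a, bx, by, box_b):
    """True if two axis-aligned hit-boxes overlap (touching edges don't count)."""
    a_left, a_top = ax + box_a.dx, ay + box_a.dy
    b_left, b_top = bx + box_b.dx, by + box_b.dy
    return (a_left < b_left + box_b.w and b_left < a_left + box_a.w and
            a_top < b_top + box_b.h and b_top < a_top + box_a.h)


def hits_weak_point(bullet_xy, bullet_box, boss_xy, weak_points):
    """Index of the first boss weak point the bullet overlaps, or None."""
    for i, wp in enumerate(weak_points):
        if boxes_overlap(*bullet_xy, bullet_box, *boss_xy, wp):
            return i
    return None
```

Rectangle tests are also far cheaper than walking two masks pixel by pixel, so a plausible design is to use hit-boxes as the first pass and fall back to the mask test only where pixel accuracy matters.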

Head isn't doing too well after being rudely woken up this morning by some incessant banging. Till the next update.

Anyway, I talked about getting parallax background movement to work yesterday and said that the speed was acceptable.

Well, it was acceptable in the stochastic sense. In reality, there were big dips in the processing rate ever so often even while using the PCOPY caching technique that came from the increase in workload needed to dump a whole bunch of large (relative to the screen) bitmaps at once whenever the background had to be shifted by a pixel due to the parallaxed movement. This was unacceptable for the simple reason that it was affecting the output animation in a very obvious way.

20 years ago me would have raised my hands and go ``well I don't know how to solve it''. The me of today just shrugged at 1am and said ``meh, let's use another screen page (we have 6 spare not counting the two that we are using for the page-flipping animation technique) to incrementally dump the 21 vertical slices of the background for the next pixel shift, and then just copy it over to the original page for the full background, filling in the missing slices that we didn't manage to do before''. And that was what I did.

It wasn't too hard to implement it---I just added a new variable amortisedXtile that kept track of which of the 21 slices I have drawn so far. Then, I shoved some variant of a conditional on amortisedXtile that would do the relevant PUT as needed. So, instead of paying 21×C all at once to draw the entire background, I just pay C slowly over 21 different rendering cycles to spread the cost around. It worked superlatively well in reducing those dips in processing rate.

One last thing I did to further amortise the cost of the parallax background update was to apply the same set-up to the shifting of the vertical slices by one tile whenever the left-most slice had fallen out of the screen. In a modern set-up, this would be trivial---I would just need to maintain [say] a singly-linked list of pointers to the bitmaps in memory, appending a new one to the end while dropping the first. Except in QuickBASIC, there isn't such a thing as ``pointers''. No, I can't even make use of the 20-bit physical address thing I mentioned before, because I was using the built-in GET/PUT statements for these. Those are faster than any equivalent that I could write in QuickBASIC, unless I decided to go down the Dark Arts Path of using pure assembly (recall that I've disallowed myself the use of that). If I wanted the array representing slice 1 to now represent slice 2, I would have to copy the contents of slice 2 into slice 1. Sadly, the GET graphics statement works an order of magnitude faster than any FOR-loop that I could code in QuickBASIC to do that copy.
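Without pointers, "dropping the first slice and appending a new one" turns into copying each slice's contents one place to the left. The sketch below models that with fixed buffers and spreads the copies over frames, one per call to `step`. The class and method names are hypothetical, and it assumes the shift finishes before the strip is next drawn, since the strip is half-updated while a shift is in progress.

```python
class SliceStrip:
    """Fixed slice buffers shifted by copying contents, one copy per frame."""

    def __init__(self, slices):
        self.slices = [list(s) for s in slices]  # one buffer per slice
        self.pending = None    # next slot to overwrite, or None when idle
        self.incoming = None   # contents for the new right-most slice

    def begin_shift(self, new_slice):
        """Start dropping the left-most slice and appending new_slice."""
        self.pending = 0
        self.incoming = list(new_slice)

    def step(self):
        """Do one unit of the shift; return True once the strip is settled."""
        if self.pending is None:
            return True
        last = len(self.slices) - 1
        if self.pending < last:
            # Copy slice i+1 into slice i in place (like filling an
            # existing array), working left to right so nothing is lost.
            self.slices[self.pending][:] = self.slices[self.pending + 1]
            self.pending += 1
            return False
        # Final step: the right-most buffer receives the new slice.
        self.slices[last][:] = self.incoming
        self.pending = None
        self.incoming = None
        return True


strip = SliceStrip([[1], [2], [3]])
strip.begin_shift([4])
while not strip.step():
    pass  # in a game loop, each call would happen on a separate frame
print(strip.slices)
```

A shift of n slices takes n calls to `step`, so the same "enough frames between shifts" condition applies here as for the background redraw.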

So with all those amortised things added in, the parallax background renderer is much more efficient than before. All of this is predicated on having more than 21 frames of activity before the next pixel shift though, which is something I can control somewhat through my choice of the overall width of the background ``skybox'' image and the overall width of the entire level.
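One way to see how the two widths control the spacing of pixel shifts, assuming a simple linear parallax mapping: while the camera travels across the level (level width minus screen width), the skybox scrolls by its own width minus the screen width. The skybox and level widths below are made-up numbers for illustration, and `frames_per_bg_shift` is a hypothetical helper.

```python
from fractions import Fraction

SCREEN_W = 320   # screen width in pixels (320x200 modes)
NUM_SLICES = 21  # slices the amortised redraw spreads over


def frames_per_bg_shift(sky_w: int, level_w: int, camera_speed: int,
                        screen_w: int = SCREEN_W) -> Fraction:
    """Frames between one-pixel shifts of the parallax background.

    Assumes linear parallax: while the camera crosses the level
    (level_w - screen_w pixels), the skybox scrolls sky_w - screen_w pixels.
    camera_speed is in camera pixels per frame.
    """
    bg_px_per_camera_px = Fraction(sky_w - screen_w, level_w - screen_w)
    return 1 / (bg_px_per_camera_px * camera_speed)


for level_w in (6720, 8320):
    frames = frames_per_bg_shift(640, level_w, camera_speed=1)
    print(level_w, frames, frames > NUM_SLICES)
```

With a 640-pixel skybox and a camera moving one pixel per frame, a 6720-pixel level gives a shift every 20 frames, while an 8320-pixel level stretches that to 25, comfortably above 21. Doubling the camera speed halves the spacing.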

I also got the explosion rendering pipeline to work correctly, and wrote a basic pixel-collision function between the masks of two sprites. That is not sufficient for true pixel-perfect collision detection though---we need to take into account the intermediate motion between the start and end of the trajectory of the two sprites as well. Otherwise we will have the age-old problem where a sprite moves so fast that it completely bypasses collision altogether. That's a different math problem for a different day, but it will get done.
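For reference, here is a minimal sketch of a mask-versus-mask test, plus one common way to handle the fast-sprite problem: sub-stepping along the straight-line motion so that the relative offset changes by at most one pixel per axis per check. This is not the post's collision code; masks are assumed to be lists of strings with `#` marking opaque pixels, and both function names are hypothetical.

```python
def masks_overlap(mask_a, ax, ay, mask_b, bx, by):
    """True if any opaque pixel of A lands on an opaque pixel of B.

    Masks are lists of equal-length strings; '#' marks an opaque pixel.
    (ax, ay) and (bx, by) are the top-left screen positions.
    """
    left, right = max(ax, bx), min(ax + len(mask_a[0]), bx + len(mask_b[0]))
    top, bottom = max(ay, by), min(ay + len(mask_a), by + len(mask_b))
    for y in range(top, bottom):          # empty if the boxes don't meet
        row_a, row_b = mask_a[y - ay], mask_b[y - by]
        for x in range(left, right):
            if row_a[x - ax] == "#" and row_b[x - bx] == "#":
                return True
    return False


def swept_overlap(mask_a, a_start, a_end, mask_b, b_start, b_end):
    """Check overlap along straight-line motion of both sprites in a frame."""
    # Only A's offset relative to B matters, so hold B at the origin.
    rx0, ry0 = a_start[0] - b_start[0], a_start[1] - b_start[1]
    rx1, ry1 = a_end[0] - b_end[0], a_end[1] - b_end[1]
    steps = max(abs(rx1 - rx0), abs(ry1 - ry0), 1)
    for i in range(steps + 1):
        t = i / steps
        rx = round(rx0 + (rx1 - rx0) * t)
        ry = round(ry0 + (ry1 - ry0) * t)
        if masks_overlap(mask_a, rx, ry, mask_b, 0, 0):
            return True
    return False


dot, wall = ["#"], ["#"]
print(masks_overlap(dot, 10, 0, wall, 5, 0))                   # end position only
print(swept_overlap(dot, (0, 0), (10, 0), wall, (5, 0), (5, 0)))
```

Checking only the end position misses a one-pixel bullet that jumps ten pixels past a thin wall, while the swept check catches it. The cost grows with the distance moved per frame, which is the usual trade-off of this approach.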

The final thing that I did was to add a basic gauge-bar renderer. The gauge-bar renderer is similar to, say, the life bar of some modern games, where if the number decreases, the difference between the original number and the current number is shown fleetingly with a mini-bar of a different colour. The renderer can do that now, but it cannot do it in a ``smoothly animating manner'' just yet. I'm not even sure if I want to do it, since it will require more sprite elements to keep track of, but we'll see.
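The geometry of such a bar comes down to two integer widths: the main fill, and the lag segment between the current value and the value shown before the drop. The sketch below computes both and, as a hypothetical extra, shows how nudging the lagging value down a little each frame would give the smooth shrink mentioned above. Integer-only arithmetic is an assumption chosen to mirror a QuickBASIC setting; the function names are illustrative.

```python
def gauge_segments(current: int, lagging: int, maximum: int, bar_w: int):
    """Pixel widths of the main fill and the different-colour lag segment.

    `lagging` is the value the bar showed before the drop; the lag
    segment covers the gap between it and `current`.
    """
    fill_px = current * bar_w // maximum
    lag_px = max(lagging * bar_w // maximum - fill_px, 0)
    return fill_px, lag_px


def step_lagging(lagging: int, current: int, step: int) -> int:
    """Move the lagging value down towards `current` by at most `step`.

    Calling this once per frame would shrink the lag segment smoothly
    instead of making it vanish all at once (hypothetical animation).
    """
    return max(current, lagging - step)


lag = 100
for frame in range(4):
    print(gauge_segments(60, lag, 100, 50))
    lag = step_lagging(lag, 60, 15)
```

For a 50-pixel bar dropping from 100 to 60, the lag segment goes 20, 12, 5, then 0 pixels over four frames, while the main fill stays at 30.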

I'm also thinking about incorporating hit-boxes in lieu of just using the mask to define collision. This would allow ``boss'' sprites to be more challenging to tackle. But we'll see.
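As a rough illustration of why hit-boxes can make a boss tougher than mask collision: only a small, deliberately placed region needs to count as a hit. The sketch below uses axis-aligned boxes defined relative to each sprite's top-left corner; the boss layout, the `HitBox` type and the helper names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HitBox:
    """Axis-aligned box, relative to the sprite's top-left corner."""
    x: int
    y: int
    w: int
    h: int


def boxes_overlap(a: HitBox, a_pos, b: HitBox, b_pos) -> bool:
    """Standard AABB test after moving both boxes to screen space."""
    ax, ay = a_pos[0] + a.x, a_pos[1] + a.y
    bx, by = b_pos[0] + b.x, b_pos[1] + b.y
    return ax < bx + b.w and bx < ax + a.w and ay < by + b.h and by < ay + a.h


def first_hit(boxes_a, a_pos, boxes_b, b_pos):
    """Indices (i, j) of the first overlapping box pair, or None."""
    for i, a in enumerate(boxes_a):
        for j, b in enumerate(boxes_b):
            if boxes_overlap(a, a_pos, b, b_pos):
                return i, j
    return None


# Hypothetical boss: only a 4x4 "weak point" inside the sprite counts.
boss_boxes = [HitBox(10, 10, 4, 4)]
bullet_boxes = [HitBox(0, 0, 2, 2)]
print(first_hit(bullet_boxes, (111, 61), boss_boxes, (100, 50)))  # (0, 0)
print(first_hit(bullet_boxes, (100, 50), boss_boxes, (100, 50)))  # None
```

Returning the box indices, rather than a plain yes/no, would let different boxes on the same sprite mean different things, such as armour versus weak point.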

Head isn't doing too well after being rudely woken up this morning by some incessant banging. Till the next update.

Thursday, October 21, 2021

Minor Update

Here's a short one. I've gotten the parallax background working in LED2-20. I had to ditch the vertical parallax due to what seems to be a general slowdown caused by too many FUNCTION/SUB calls. I kept the vertical slices idea, but used a code generator in Python3 to emit code that only calls the fancier GaPutBitmap() SUB on the leftmost and rightmost slices, relying on direct calls to the faster PUT statement to draw the slices in between. In addition, I ended up using an additional screen page to cache the background, which is then PCOPY'd over to the page under active rendering to further reduce the overhead from drawing each slice.
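A generator along these lines might look like the sketch below. It is not the actual LED2-20 generator: the slice array names, the `scrollX%` variable and the argument order of GaPutBitmap are assumptions, and the 16-pixel slice width is a guess chosen so that 21 slices span 336 pixels, one slice more than a 320-pixel screen. Only the PUT form, `PUT (x, y), array, PSET`, is standard QuickBASIC syntax.

```python
from __future__ import annotations


def generate_background_draw(num_slices: int, slice_w: int, y: int = 0) -> list[str]:
    """Emit QuickBASIC statements that draw a row of vertical slices.

    Only the two edge slices, which may hang off the screen while
    scrolling, go through the slower clipping SUB; the rest use PUT.
    """
    lines = []
    for i in range(num_slices):
        x = f"{i * slice_w} - scrollX%"  # scrollX% is a hypothetical variable
        if i in (0, num_slices - 1):
            # Argument order for GaPutBitmap is assumed, not from the post.
            lines.append(f"GaPutBitmap slice{i}%(), {x}, {y}")
        else:
            lines.append(f"PUT ({x}, {y}), slice{i}%, PSET")
    return lines


print("\n".join(generate_background_draw(num_slices=21, slice_w=16)))
```

Unrolling the loop at generation time means the QuickBASIC side pays for no loop bookkeeping and makes only two SUB calls per redraw, which fits the observation that FUNCTION/SUB calls were the bottleneck.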

It does make me worried about how terrain tiles would work though, but we'll deal with it later.

Scrolling the background using on-the-fly loading from the FILEPAK for the needed slice works well now, and the speed is acceptable.

Now I'm working on the explosions part. We'll see how it goes.

Till the next update.

Wednesday, October 20, 2021

Minute Control Over Dynamics

My new alto recorder came in today. As stated in yesterday's entry, it is the Yamaha YRA-302BIII.

As expected, it definitely gave me much finer control over the tone, with soft dynamics working much better than on my previous Yamaha YRA-28BIII. I also did my usual thing of weighing it, and it weighed in at 196.0±0.1 g, compared to the 211.1±0.1 g of the YRA-28BIII. So, despite having less mass, it plays much better, which suggests that for woodwind instruments, geometry plays the larger role in determining the quality of the tone that comes out of them.
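For scale, the mass difference, treating the two ±0.1 g readings as worst-case bounds that add:

```latex
\Delta m = 211.1\,\text{g} - 196.0\,\text{g} = 15.1 \pm 0.2\,\text{g},
\qquad
\frac{\Delta m}{211.1\,\text{g}} \approx 7.2\%
```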

That said, I really cannot bring myself to get a wooden version of the recorder, or of any instrument that I don't play as often as my dizi. While wood and its allies are traditional woodwind instrument materials, their dimensional stability has always been something to worry greatly over. Unlike sufficiently dense plastic and metal, wood will change its shape due to the combined effects of heat and humidity. The geometry of a woodwind instrument's air column bore is extremely sensitive to these minute changes, so wooden instruments really require more care to ensure that their timbre does not get overly affected.

``But MT, you play the dizi---it's wood-like. Isn't that contradictory?''

Ah, but I play the dizi more often than some of my other alternate instruments, and so I am more aware of the changes when they occur. Also, unlike western instruments, the dizi does not have as much ``work'' done on it that can exacerbate any of these ambient environment issues. What I mean is, dizi do not have screw holes to mount posts on (compare with the piccolo and the clarinet), nor do they combine woods that behave differently under the same ambient conditions (compare with the recorder, where the block is often made of a different wood from the rest of the instrument). They do have a stopper that used to be cork and thus potentially problematic, but most modern-made dizi use a synthetic plastic-rubber one instead. The joint separating the head joint from the body is another potential source of issues, but it tends to be problematic only if it is sufficiently cold and dry.

So, for instruments that I don't play as often, I'm more likely to look for something that is more consistent in its dimensions. And high density plastics tend to fit this role very well. Sure, it might lose out a little on the visual aesthetics, but come on, it's a musical instrument---how it sounds is more important than how it looks. And since these instruments aren't played as often as my dizi, cost can become a factor to consider too. The cost we are talking about is more than just the initial sunk cost of purchase---it also includes the upkeep. A hard-wearing high density plastic musical instrument built with the right geometry can go pretty far without much upkeep beyond the basics, and that is usually good enough.

I'm up to p500/803 of Mathematics for Engineers (2nd Edition), and despite the author's surname being Dull, it is anything but. I might take a break from reading tomorrow and just play something, either a first-person shooter like Serious Sam 3: BFE, or a restart of Cthulhu Saves the World; I don't think that I have actually completed that game. I do remember that I liked the humour in it though.

Maybe I'll work a little on LED2-20 to get that parallax background working. I am starting to think that splitting into vertical strips isn't sufficient... parallax scrolling should also include some minor up/down movement, which means that the far background should be both wider and taller than the screen. My current partial bitmap code limits bitmaps to no more than 320×200, which was why I decided to decompose the background image into vertical strips. But needing vertical parallax as well makes me think that I may need to further sub-divide each vertical strip into two to ensure that I don't break anything.
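The sub-division idea can be checked with a little arithmetic: split the background into strips, then cut each strip into the fewest equal-height pieces that stay under the 320×200 limit. The 480×240 skybox and 16-pixel strip width below are hypothetical numbers, and `tile_rects` is an illustrative helper.

```python
MAX_W, MAX_H = 320, 200  # size limit of the partial-bitmap code


def tile_rects(bg_w: int, bg_h: int, strip_w: int):
    """Cut a background into (x, y, w, h) pieces no larger than MAX_W x MAX_H.

    Each vertical strip is split into the fewest equal-height pieces
    that fit under MAX_H.
    """
    if not 0 < strip_w <= MAX_W:
        raise ValueError("strip width must be in 1..MAX_W")
    pieces = -(-bg_h // MAX_H)    # ceiling division: pieces per strip
    piece_h = -(-bg_h // pieces)  # balanced height of each piece
    rects = []
    for x in range(0, bg_w, strip_w):
        w = min(strip_w, bg_w - x)
        for y in range(0, bg_h, piece_h):
            rects.append((x, y, w, min(piece_h, bg_h - y)))
    return rects


r = tile_rects(480, 240, 16)
print(len(r), r[0], r[1])
```

For a skybox up to 400 pixels tall, two pieces per strip suffice, which matches the "sub-divide each vertical strip into two" idea; taller images would need three or more.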

Eh, we'll see.

That's all I want to write for now. Till the next update then.

Tuesday, October 19, 2021

Manual for Recorder Fingering Patterns

I find myself getting more interested in recorders. They are actually more interesting than our mandatory school-day lessons might suggest, because they have a pretty wide range of nearly 3 octaves (kinda like a concert flute), though the more standard range is closer to 2+ octaves (2 octaves and a minor second). This is very close to that of the dizi actually---the dizi has a standard range of 2 octaves and a second, with the option to go up to 2 octaves and a fourth. There are still some subtle differences of course: in terms of correspondence of fingering patterns, the recorder's bell tone starts one major second lower than the dizi's, and while the recorder does overblow in octaves, the addition of the thumb hole on the recorder means that the lower half of the second instrument octave has some funky fingering patterns to begin with.

Getting to the upper half of the second instrument octave on the recorder is super funky. It uses a lot of variations on the ``standard'' simple flute fingering pattern of XXO-XXO to select the appropriate higher harmonic for the right pitch (see this old post for examples of the fingering). That is hard to do on a 6-hole dizi for two reasons: the lack of a thumb hole makes it harder to select that higher harmonic by forcing an anti-node through pure embouchure control, and the dizi's holes are much larger than the recorder's while its inner bore diameter is also larger, which makes it less responsive than the recorder to right-hand hole covering as a way of forcing different standing wave modes. But having direct embouchure control on the dizi does make up for the differences---the recorder cannot be coaxed to overblow very high harmonics due to the fixed angle set by the windway and labium.
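To put numbers on "choosing a higher harmonic": in an idealised open pipe, harmonic n sits at n times the fundamental, or 1200·log2(n) cents above it. Real recorder bores deviate from this ideal, which is part of why the cross-fingerings are so particular. The helper below is a hypothetical illustration that lists each harmonic's nearest equal-tempered interval and its deviation in cents.

```python
import math


def harmonic_intervals(max_n: int):
    """(n, nearest equal-tempered semitones, deviation in cents) per harmonic.

    Assumes an ideal harmonic series, f_n = n * f_1.
    """
    out = []
    for n in range(1, max_n + 1):
        cents = 1200 * math.log2(n)
        semis = round(cents / 100)
        out.append((n, semis, round(cents - 100 * semis, 1)))
    return out


for row in harmonic_intervals(5):
    print(row)
```

The third harmonic lands an octave and a fifth (19 semitones) up, about 2 cents sharp of equal temperament, while the fifth harmonic is about 14 cents flat of two octaves and a major third.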

The recorder does have a more dainty feel to it compared to the one-key flute (and it definitely is much more dainty than the dizi, though it is possible to play the dizi that way with enough training).